Rethinking Crowdsourcing

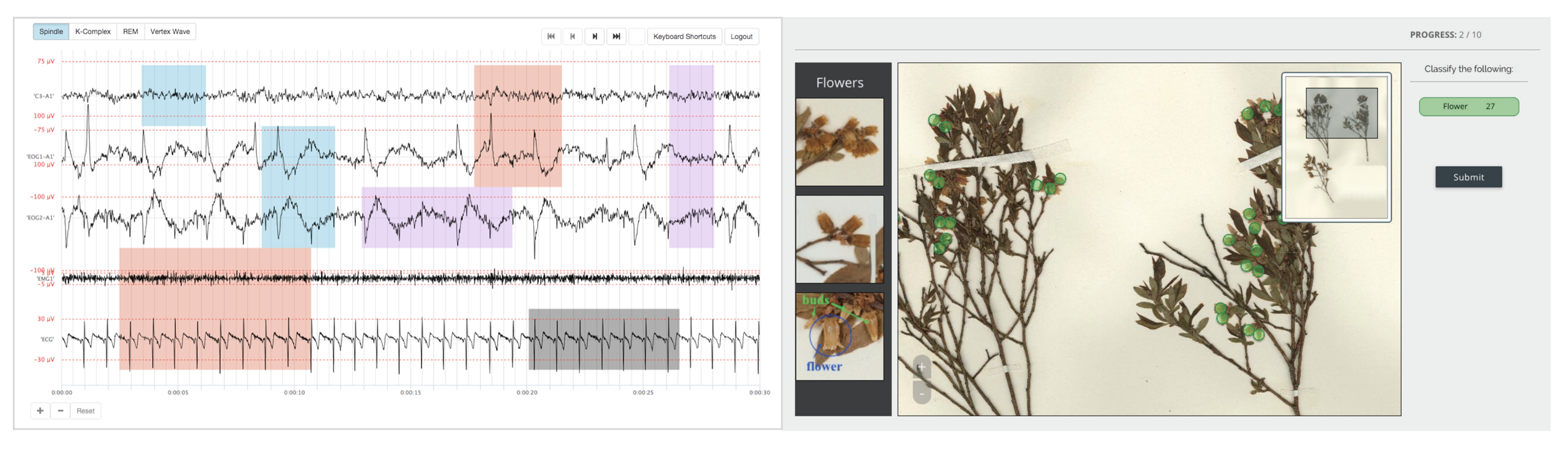

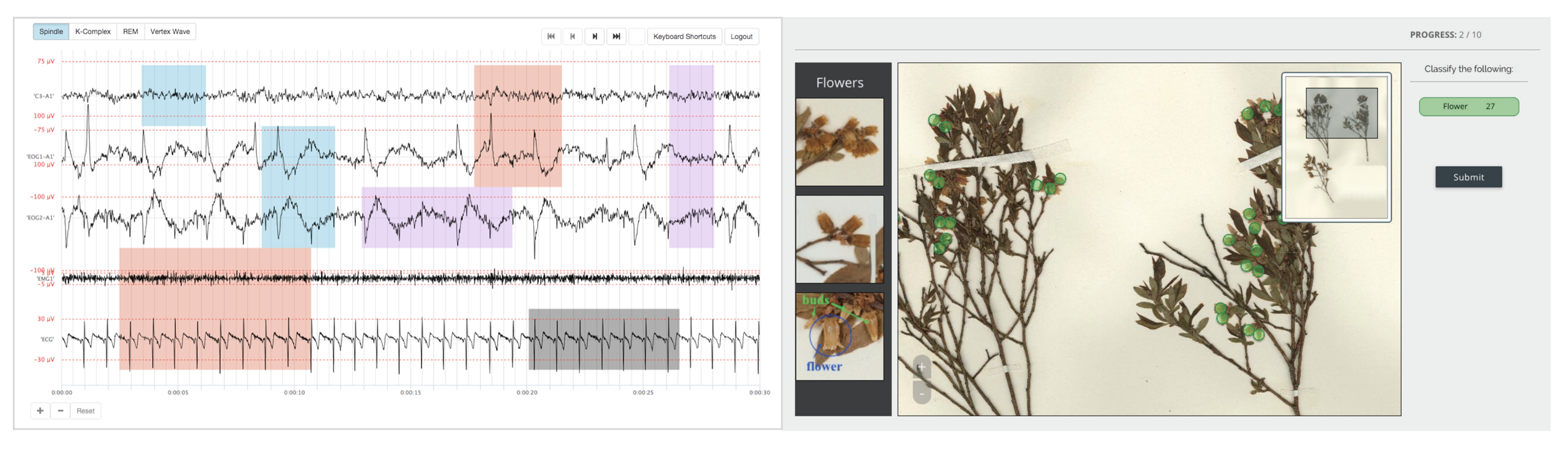

Our research on crowdsourcing aims to broaden the types of tasks that crowdsourcing systems can handle by developing new interfaces that enhance people’s ability to perform complex, unfamiliar, or ambiguous tasks. The premise is that designing meaningful interactions between novices, experts, and algorithms can improve output quality, efficiency, or worker satisfaction. We have developed crowdsourcing platforms that target scientific and medical tasks. In one project, we developed CrowdEEG, a platform that enables medical specialists to collaboratively annotate sleep EEG data, and demonstrated that the deliberation process can improve output quality and efficiency. Our work is also increasingly concerned with understanding crowdwork practices, such as tooling practices and how they affect workers’ mental health, as well as fair payment in collaborative tasks.

Publications

M. Schaekermann, G. Beaton, E. Sanoubari, A. Lim, K. Larson and E. Law. Ambiguity-aware AI Assistants for Medical Data Analysis. In CHI 2020.

A. Williams, G. Mark, K. Milland, E. Lank and E. Law. The Perpetual Work Life of Crowdworkers: How Tooling Practices Increase Fragmentation in Crowdwork. In CSCW 2019.

M. Schaekermann, G. Beaton*, M. Habib, A. Lim, K. Larson and E. Law. Understanding Expert Disagreement in Medical Data Analysis through Structured Adjudication. In CSCW 2019.

M. Schaekermann, J. Goh, K. Larson and E. Law. "Resolvable vs. Irresolvable Disagreement: A Study on Worker Deliberation in CrowdWork." In CSCW 2018. * Best Paper Award

W. Callaghan, J. Goh, M. Mohareb, A. Lim and E. Law. "MechanicalHeart: A Human-Machine Framework for the Classification of Phonocardiograms." In CSCW 2018.

M. Cartwright, A. Seals, J. Salamon, A. Williams, S. Mikloska, D. MacConnell, E. Law, J.P. Bello and O. Nov. "Seeing Sound: Investigating the Effects of Visualizations and Complexity on Crowdsourced Audio Annotations." In Proceedings of the ACM on Human-Computer Interaction, vol. 1(1): Computer-Supported Cooperative Work and Social Computing 2017, 2017.

A. Williams, J. Goh, C. Willis, A. Ellison, J. Brusuelas, C. Davis and E. Law. Deja Vu: Characterizing Worker Reliability Using Task Consistency. To appear in AAAI Conference on Human Computation and Crowdsourcing (HCOMP), 2017.

C. Willis, E. Law, A. Williams, B. Franzone, R. Bernardos, L. Bruno, C. Hopkins, C. Schorn, E. Weber, D. Park and C. Davis. CrowdCurio: an online crowdsourcing platform to facilitate climate change studies using herbarium specimens. In New Phytologist, 2017.